The True Extent of the 'Responsible AI' Problem

AI-focused leaders are aware that a 'responsible AI' problem exists, but there’s still no consensus on what responsibilities enterprises have around AI use

A biased algorithm overcharges a subset of your customers, triggering a consumer rights scandal; a self-driving car swerves to avoid a collision and save its driver, but kills two pedestrians.

The ethical implications of AI are thorny and varied. While most enterprises adopting AI technologies may never face life-or-death choices, all of them are at risk of reputational damage.

“If you believe that you want to give back to the world, then don't let your AI take from it”

Cortnie Abercrombie, Founder and CEO, AI Truth

Our second annual survey of 100 C-level data, analytics and AI executives, conducted for data analytics company FICO, shows that many enterprises are now paying more attention to the question of AI ethics. In fact, 21% have made AI ethics a core element of their organizations’ business strategies. Another 30% say they will do so within 12 months.

But our research also shows there is little consensus about what responsibilities businesses have in this area.

“I think there's now much more awareness that things are going wrong,” says Cortnie Abercrombie, Founder and CEO of responsible AI advocacy group AI Truth. “But I don't know that there is necessarily any more knowledge about how that happens.”

Awareness of Responsible AI Issues Remains Patchy

The issues that underpin AI ethics range from biased datasets and unethically sourced data to unfair uses for AI systems and a lack of accountability when things go wrong.

Our survey respondents report that awareness of these topics is highest among risk and compliance staff, estimating that more than 80% of staff in these roles have a complete or partial understanding of the problem. They also rated awareness highly among IT teams and data and analytics teams, at well over 70%.

For other stakeholders, the picture is patchy at best. Respondents say more than half of shareholders have a poor understanding of AI ethics, and more than a third say the same about board members and the wider executive team.

That suggests possible tensions between leaders – who may want to get models into production quickly – and stakeholders such as data scientists, who want to take the time to get things right.

“I've seen a lot of what I call abused data scientists,” Abercrombie says. “Basically, they're being told by the person who funds the AI projects, ‘Look, you've got to get this done in this timeframe, and I've only got this much money.’”

Also of concern is the low awareness our respondents believe customers have around AI ethics. Just 32% of respondents believe their customers have a full understanding of the ethical issues around how AI impacts their lives.

“I think that would-be customers need to be able to have information that allows them to make an informed choice about which kinds of products and which kinds of companies they want to engage with,” says Mark Caine, Lead: AI and ML at the World Economic Forum.

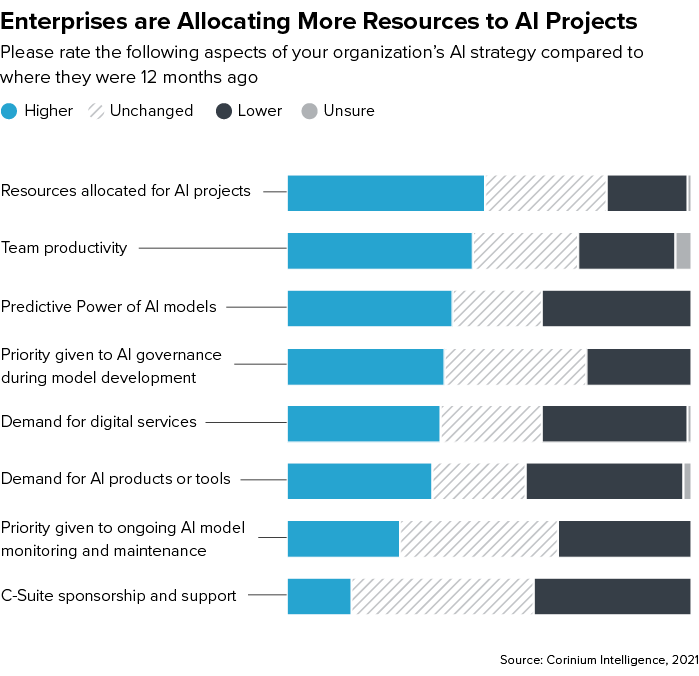

Of the enterprises we surveyed, 49% report an increase in resources allocated to AI projects over the past 12 months, and a further 30% say their AI budgets and headcounts have held steady. With investment growing or holding firm, the need to address these concerns is all the more urgent.

The business community is committed to driving transformation through AI-powered automation. If key stakeholders aren’t even aware of the risks, some fear effective self-regulation is unlikely.

There’s No Consensus on What ‘Ethical’ Means

Our research also shows that there is no consensus among executives about what a company’s responsibilities should be when it comes to AI.

“At the moment, companies decide for themselves whatever they think is ethical and unethical, which is extremely dangerous,” argues Ganna Pogrebna, Lead for Behavioral Data Science at The Alan Turing Institute. “Self-regulation does not work.”

We asked respondents to rate a range of AI uses against three levels of responsibility: 1) no responsibilities beyond ensuring regulatory compliance; 2) AI systems must meet the basic ethical standards of fairness, transparency and being in customers’ best interests; or 3) AI systems must meet those basic ethical standards and have explainable outputs.

Most agree that AI systems for data ingestion must meet basic ethical standards and that systems used for back-office operations must also be explainable. But the emphasis on explainability for back-office systems may partly reflect the challenge of getting staff to adopt new technologies as much as wider ethical considerations.

“Unless we have the legal requirement for companies to abide by the law and do certain things, it is highly unlikely that they will engage in [ethical] behavior”

Ganna Pogrebna, Lead for Behavioral Data Science, The Alan Turing Institute

Our findings reveal no consensus about what a company’s ethical responsibilities are regarding AI systems that may affect people’s livelihoods or cause injury or death. However, the high number of respondents who say they have no responsibilities beyond regulatory compliance for these systems may partly reflect how few businesses currently use AI for such purposes.

Above all, our findings highlight how much work remains to establish global principles that ensure enterprises use AI systems ethically and responsibly and guard against the reputational risks that come with misuse.

For Abercrombie, the key message for enterprises is that AI ethics cannot be treated as business as usual.

“I recommend that every company assess the level of harm that could potentially come with deploying an AI system, versus the level of good that could potentially come,” she says.

“The very first thing I tell groups that are dealing with [the riskiest types of AI] is, you need to have a full vetting process before you release those to the wild,” she adds. “This cannot just be your typical case of, ‘Let's release an MVP [minimum viable product] and see how it goes.’”

This is an excerpt from our new research report, The State of Responsible AI: 2021.