Insights on Designing Data Systems for Flexibility and Repurposing

A costly failure at one federal agency and a pitch-perfect strategy at another offer lessons on achieving true interoperability

By Corinium Global Intelligence

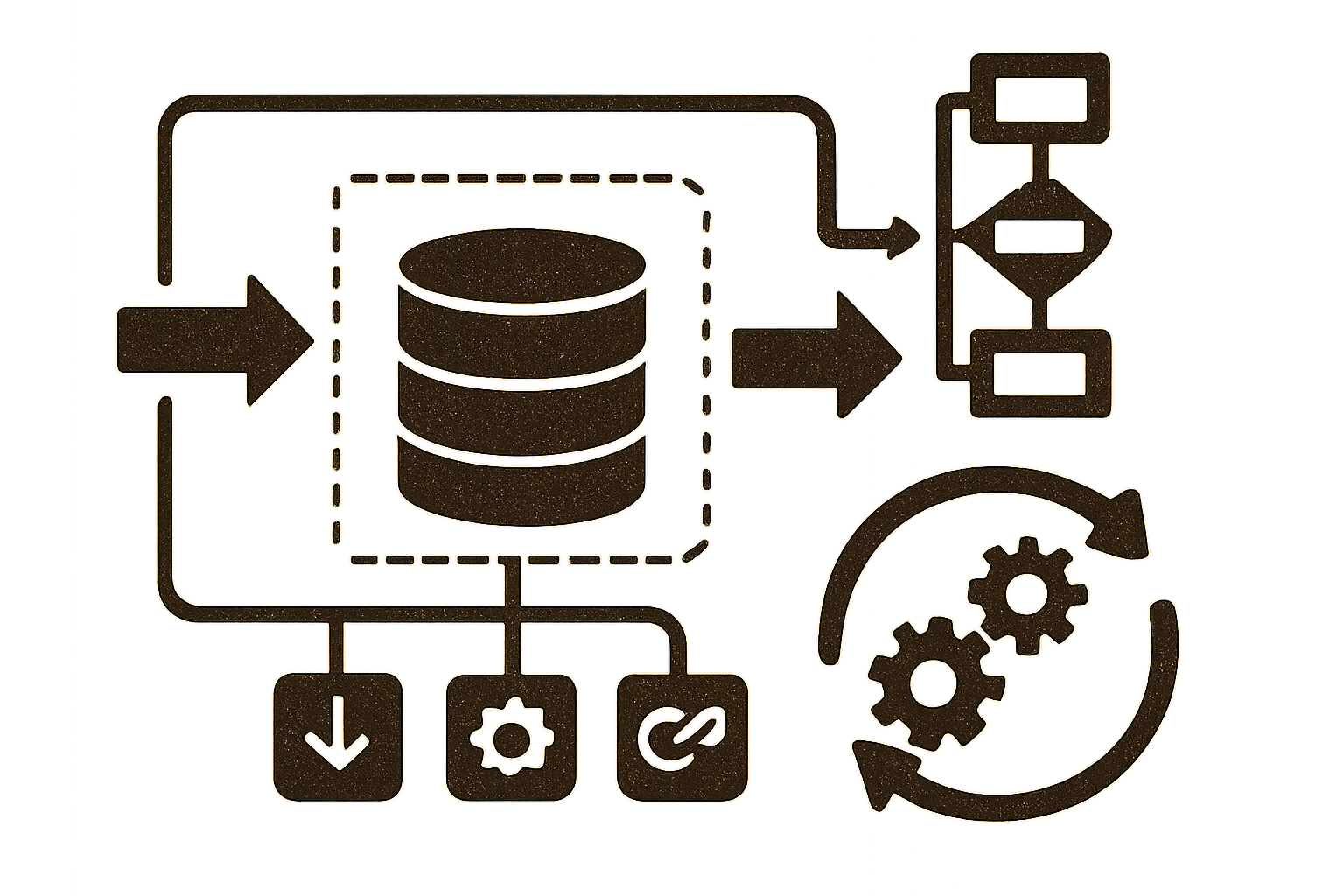

Modern technology investments must prioritize resilience and adaptability, allowing agencies to pivot their data capabilities quickly to meet emerging needs without rebuilding foundational components. The data cleaning, pipelining, and infrastructure work you invest in today can be redirected to new problems tomorrow.

AI and data systems built with fungibility in mind allow organizations to shift direction by feeding models new data inputs rather than starting over. This agility shortens timelines for new use cases and maximizes the value of existing investments.

To achieve true agility, ensure that documentation is not an afterthought, but an integrated part of the system itself.

These are among the takeaways from a new whitepaper by Corinium and data.world.

Patrick McGarry, Federal Chief Data Officer at ServiceNow, which recently acquired data.world, says these takeaways are especially important for federal agencies, where embedding documentation directly into the architecture allows users, auditors, and automated systems alike to understand how tools were designed, configured, and deployed within specific mission contexts.

“We need to support reproducibility, auditability and adaptive governance across teams, tools and clouds, and make sure we are evolving to match the capabilities of our system,” he says.

Lessons from federal broadband expansion

In the early 2010s, federal efforts to expand broadband were repeatedly stalled because different agencies used conflicting, incomplete, or outdated data to define coverage areas. Billions of dollars in funding were at risk of duplication or coverage gaps, simply because no one could trace or verify the origin or quality of the data they relied on.

A lack of traceability is not just a compliance gap; it’s a mission risk. If an AI system’s output is challenged in court, in Congress, or by the public, many agencies can’t defend or reproduce how the decision was made.

Without a provenance trail, agencies will struggle to fulfill basic elements of trustworthy AI oversight, especially under OMB M-24-10 and EO 14110.

“There is a reason I harp on interoperability as a key concept,” says McGarry.

Sustain trust without slowing innovation

Embedding human judgment at key points in AI and data workflows reinforces trust and ensures responsible oversight. These touchpoints need not hinder progress. Often, it’s enough for someone to “poke their head in” and validate a decision.

This practice cultivates a culture of shared responsibility around data and automation. At the same time, building data literacy and upskilling the team will empower a more informed, data-driven workforce.

But that workforce needs usable tools. A dashboard alone doesn’t create insight; to truly benefit from data tools, users need the ability to explore definitions, understand inputs, and trace how metrics are derived. Don’t overlook the value of good UX. Making information easily accessible – meaning clickable, contextual, and self-service – helps demystify data for users.

“You want to start bubbling things to the surface like: Can I click into this term on the dashboard and understand what it means? What are the data inputs that are feeding this dashboard?” says McGarry. “The more you provide these things for people to be able to self service and self educate, the more it continues to draw them deeper into the data conversation.”

Case study: how the CFPB built a trusted data foundation

The Consumer Financial Protection Bureau (CFPB) has quietly become a standout example of what modern data governance can look like. Over the past two years, it has transformed how it manages data via three major initiatives.

“The CFPB isn’t just checking boxes,” says McGarry. “They’re building the systems and culture that can support responsible AI, evidence-based policymaking, and a more transparent government.”

The Bureau developed an Enterprise Data Strategy that acted as a living blueprint, not just a document. It had executive-level buy-in and clear accountability, which drove data conversations at the highest levels.

It also overhauled its data intake and disclosure processes. This wasn’t just about policy; it was operational. The Bureau automated workflows, documented timelines, and brought together key stakeholders from Legal, Privacy, FOIA, Cyber, and beyond. The result is a governance structure that’s both streamlined and defensible, two things every agency needs as it faces increasing scrutiny over AI data use.

Finally, it replaced a legacy tool with a modern data catalog that powers its stewardship program, supports taxonomy alignment, enables automation for access controls, and even provides public-facing engagement channels.

“This is a roadmap worth studying,” says McGarry.

For more actionable takeaways to help you implement resilient data and AI strategies, download our whitepaper here.