As a machine learning practitioner, I found that one graphic stayed with me more than any other in 2016, and not because it delighted me. The Gartner Hype Cycle for Emerging Technologies 2016 was trending on social media. Technology wonks, people whom I greatly respect, were posting, commenting on and retweeting that graphic. I couldn’t help but notice that my field of work, machine learning, was perched, ingloriously, at the very pinnacle of the cycle.

At a time when it seemed that at least one out of every ten entries on my Twitter feed referred to this ignominious infographic, I was working for several companies, implementing these selfsame technologies in their enterprises and growing almost giddy at the ease with which we were able to do things faster and better. I was left with a feeling of great unease, the kind that, like all episodes of cognitive dissonance, spurs one on to resolve the contradiction. It seemed my experience was at odds with that of the people in the know.

In resolving this seeming paradox, I came to the conclusion that two very different technological perspectives are at play here. They conspire to create a disjointed view of a key technology, one that threatens to leave enterprises literally behind the curve.

Force Multipliers

There are several forces aiding and abetting this divergence. Firstly, big data, in all its dimensions (amount of data, speed of data, and range of data types and sources), has created fodder for training machine learning algorithms like never before. Secondly, computational power and cloud computing, especially using graphics processing units (GPUs), have largely beaten back the problem of the “combinatorial explosion”, which made certain algorithms unworkable. Thirdly, data science has become a hot career choice, widely accessible through MOOCs, which has somewhat relieved the pressure on companies to find good hires in the field. Fourthly, companies like Google, Facebook, and the Chinese firm Baidu have established very strong research units, e.g. Google DeepMind, Facebook AI Research and Andrew Ng’s team at Baidu.

The wresting away of research leadership from educational institutions by large corporates has been an interesting development, and somewhat unforeseen. Large corporations have realized that to get the very best talent, they need to provide not just top salaries, but opportunities to engage in groundbreaking research. The extent to which the center of mass has shifted is evidenced by Yann LeCun, head of Facebook AI Research, delivering the keynote address at NIPS (the premier machine learning and computational neuroscience conference) in December of 2016. NIPS 2016 had more than 5,000 attendees, an unheard-of number in the industry.

Finally, there has been a tidal shift towards open source software within the machine learning movement. Google open sourced its TensorFlow system in November 2015. Since then it has seen phenomenal growth and a torrent of contributions from the community. Other frameworks, like Theano, Caffe, Torch and MXNet, already enjoyed support within the open source community. Apart from that, the most popular languages used with these frameworks (e.g. Python) are open source.

The Nadir of the AI Movement

Engaged in all these frenzied activities, one can be forgiven for forgetting a second perspective, one with a much more jaundiced eye. This perspective was born out of the so-called AI winter. By most accounts, the nadir of the AI movement came in 1973 with the publication of the Lighthill report. The report was deeply skeptical of AI’s ability to deliver on its grandiose promises, and led to funding being cut for most of the artificial intelligence projects in the United Kingdom. It had a knock-on effect, with similar results around the globe. Academics became fearful of calling themselves AI researchers, lest they be labelled as fanciful or eccentric. AI continued to live on under the guise of “informatics”, “machine learning”, or even “cognitive systems”.

Even as artificial intelligence research took on a decidedly clandestine character, a very different development occurred. Sometimes referred to as the Lotus revolution, the launch of Lotus 1-2-3 in the early eighties changed the way industry conducted itself. Financial modeling went mainstream. Enterprises started looking towards proprietary productivity tools for an advantage. In recent years, the promises of these tools have themselves proven to be illusory, as we’ve seen productivity growth stagnate.

Artificial Intelligence in 2017

What, then, is a realistic view of artificial intelligence in 2017? The truth is that we have not attained what practitioners call artificial general intelligence. There are no truly intelligent bots out there. Beating opponents at chess or Go is not what we have in mind when we talk about AI.

Having said that, machine learning has made tremendous progress. We can now use our algorithms to optimize call centers, drive cars, fly drones, manage our security, and recommend what to read, watch, buy and listen to. Machine learning algorithms outperform physicians at diagnosis, predict epidemics, and lower your insurance premiums, without ever achieving the Hollywood version of artificial intelligence, because they know more (they have vastly more data) and they compute far better than the human brain ever will.

AI did not disappear during the AI Winter; it just went silent. Its development continued, and is now accelerating beyond our wildest expectations because of industry and open source working together. The AI Winter is over.

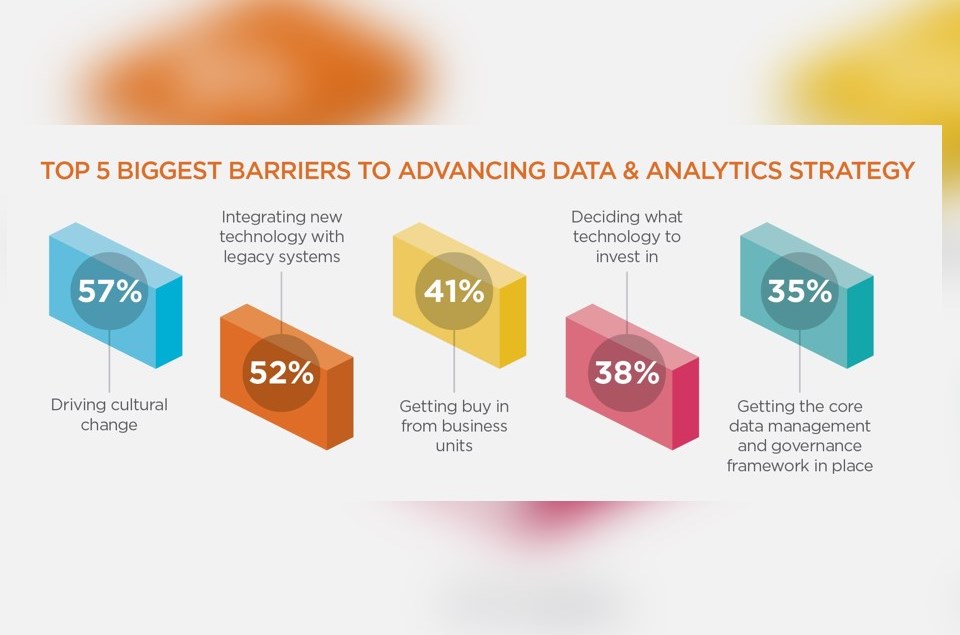

The danger to business leaders is that their reliance on proprietary and legacy systems within their enterprises, and the profound shift in thinking that machine learning requires, will leave them unable to take advantage of the bonanza machine learning is about to deliver. As Andrew Ng is fond of saying, “AI is the new electricity”, and that might not be hype.

(image credits: Gartner)

***

Join us at the Chief Data & Analytics Officer Africa happening on July 3-5, 2017. For more information, visit www.coriniumintelligence.com/chiefdatanalyticsofficerafrica

By Raymond Ellis:

Raymond Ellis is the Principal Data Scientist at Igel und Fuchs. Connect with him on LinkedIn